EU Wants AI-Generated Content Labelled

In a recent press conference, the European Union said that, to help tackle disinformation, it wants the major online platforms to label AI-generated content.

The Challenge – AI Can Be Used To Generate And Spread Disinformation

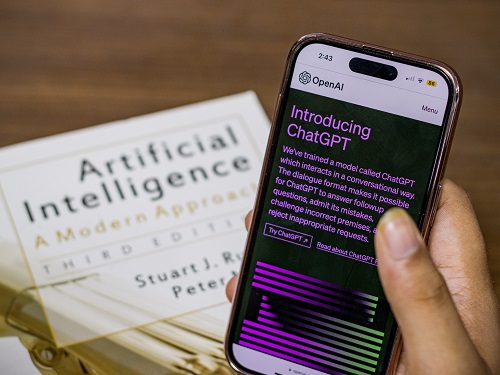

In the press conference, Věra Jourová (the European Commission’s vice-president for values and transparency) outlined the challenge by saying, “Advanced chatbots like ChatGPT are capable of creating complex, seemingly well-substantiated content and visuals in a matter of seconds,” and that “image generators can create authentic-looking pictures of events that never occurred,” as well as “voice generation software” being able to “imitate the voice of a person based on a sample of a few seconds.”

Jourová warned of widespread Russian disinformation in Central and Eastern Europe and said, “we have the main task to protect the freedom of speech, but when it comes to the AI production, I don’t see any right for the machines to have the freedom of speech.”

Labelling Needed Now

To help address this challenge, Jourová called for all 44 signatories of the European Union’s code of practice against disinformation to help users better identify AI-generated content. One key method she identified was for big tech platforms such as Google, Facebook (Meta), and Twitter to label any AI-generated content as such. She suggested that this change should take place “immediately.”

Jourová said she had already spoken with Google’s CEO Sundar Pichai about how technologies exist, and are being worked on, to enable the immediate detection and labelling of AI-produced content for public awareness.

Twitter, Under Musk

Jourová also highlighted how, by withdrawing from the EU’s voluntary Code of Practice against disinformation back in May, Elon Musk’s Twitter had chosen confrontation and “the hard way”. She warned that, by leaving the code, Twitter had attracted a lot of attention, and that “its actions and compliance with EU law will be scrutinised vigorously and urgently.”

At the time, referring to the EU’s new and impending Digital Services Act, the EU’s Internal Market Commissioner, Thierry Breton, wrote on Twitter: “You can run but you can’t hide. Beyond voluntary commitments, fighting disinformation will be legal obligation under #DSA as of August 25. Our teams will be ready for enforcement”.

The DSA & The EU’s AI Act

Legislation, such as that referred to by Thierry Breton, is being introduced in the EU as a way to tackle the challenges posed by AI in the EU’s own way rather than relying on Californian laws. Impending AI legislation includes:

The Digital Services Act (DSA) includes new rules requiring Big Tech platforms like Meta’s Facebook and Instagram and Google’s YouTube to assess and manage risks posed by their services, e.g. advocacy of hatred and the spread of disinformation. The DSA also has algorithmic transparency and accountability requirements to complement other EU AI regulatory efforts which are driving legislative proposals like the AI Act (see below) and the AI Liability Directive. The DSA directs companies, large online platforms and search engines to label manipulated images, audio, and video.

The EU’s proposed ‘AI Act’, described as the “first law on AI by a major regulator anywhere”, assigns applications of AI to three risk categories. These categories are ‘unacceptable risk’, e.g. government-run social scoring of the type used in China (banned under the Act), ‘high-risk’ applications, e.g. a CV-scanning tool to rank job applicants (which will be subject to legal requirements), plus those applications not explicitly banned or listed as high-risk, which are largely left unregulated.

What Does This Mean For Your Business?

Among the many emerging concerns about AI is the fear that the unregulated publishing of AI-generated content could spread misinformation and disinformation (via deepfake videos, photos, and voices) and, in doing so, erode truth and even threaten democracy. One way to help people spot AI-generated content is to have it labelled, which the DSA seeks to do anyway. However, the European Commission’s vice-president for values and transparency sees this as urgently needed, hence her request that all 44 signatories of the European Union’s code of practice against disinformation start labelling AI-produced content now.

Arguably, it’s unlike big tech companies to act voluntarily before regulations and legislation force them to, and Twitter seems to have opted out already. The spread of Russian disinformation in Central and Eastern Europe is a good example of why labelling may be needed so urgently. That said, as Věra Jourová herself acknowledged, free speech needs to be protected too.

With AI-generated content so difficult to spot in many cases, being published so quickly and in such vast amounts, and with AI tools freely available to all, it’s difficult to see how labelling could be achieved, monitored, or policed.

The requirement for big tech platforms like Google and Facebook to label AI-generated content could have significant implications for businesses and tech platforms alike. Most obviously, labelling AI-generated content could be a way to foster more trust and transparency between businesses and consumers. By clearly distinguishing between content created by humans and that generated by AI, users would be empowered to make informed decisions. This labelling could help combat the spread of misinformation and enable individuals to navigate the digital realm with greater confidence.

However, businesses relying on AI-generated content must consider the impact of labelling on their brand reputation. If customers perceive AI-generated content as less reliable or less authentic, it could erode trust in the brand and deter engagement. Striking a balance between AI-generated and human-generated content would become crucial, potentially necessitating increased investments in human-generated content to maintain authenticity and credibility.

Also, labelling AI-generated content would bring attention to the issue of algorithmic bias. Bias in AI systems, if present, could become more noticeable when content is labelled as AI-generated. To address this concern, businesses would need to be proactive in mitigating biases and ensuring fairness in the AI systems used to generate content.

Looking at the implications for tech platforms, there may be considerable compliance costs associated with implementing and maintaining systems to accurately label AI-generated content. Such endeavours (if they can be carried out successfully at all) would demand significant investments, including the development of algorithms or manual processes to effectively identify and label AI-generated content.

Labelling AI-generated content could also impact the user experience on tech platforms. Users might need to adjust to the presence of labels and potentially navigate through a blend of AI-generated and human-generated content in a different manner. This change could require tech platforms to rethink their user interface and design to accommodate these new labelling requirements.

Tech platforms would also need to ensure compliance with specific laws and regulations related to labelling AI-generated content. Failure to comply could result in legal consequences and reputational damage. Adhering to the guidelines set forth by governing bodies would be essential for tech platforms to maintain trust and credibility.

Finally, the introduction of labelling requirements could influence the innovation and development of AI technologies on tech platforms. Companies might find themselves investing more in AI systems that can generate content in ways that align with the labelling requirements. This, in turn, could steer the direction of AI research and development and shape the future trajectory of the technology.

The implications of labelling AI-generated content for businesses and tech platforms are, therefore, multifaceted. Businesses would need to adapt their content strategies, manage their brand reputation, and address algorithmic bias concerns. Tech platforms, on the other hand, would face compliance costs, the challenge of balancing user experience, and the need for innovation in line with labelling requirements. Navigating these implications would require adjustments, investments, and a careful consideration of user expectations and experiences in the evolving landscape of AI-generated content.
